Using Google Webmaster Tools

You must be signed in to Google Webmaster Tools and have verified ownership of your site. Once you have, you can easily remove any of your site's pages (or the whole site) that Google has wrongly indexed.

- Log in to your Google account.

- Go to http://google.com/webmasters

- If you have not signed up yet, sign up with your Google account and verify your website, as shown here:

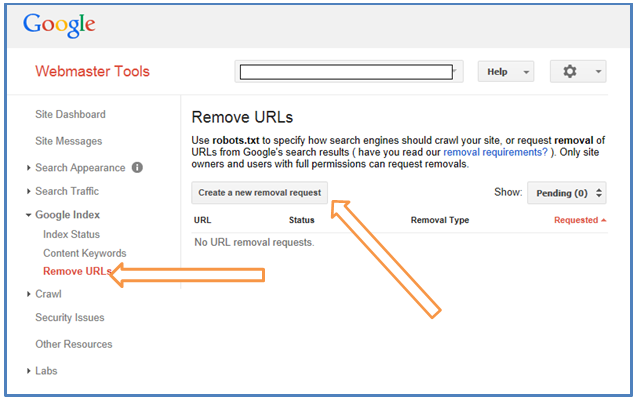

- On the Webmaster Tools home page, click the site you want.

- On the Dashboard, click Google Index in the left-hand menu.

- Click Remove URLs.

- Click New removal request.

- Type the URL of the page you want removed from search results (not the Google search results URL or cached page URL), and then click Continue.

- Click Yes, remove this page.

- Click Submit Request.

Using robots.txt

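The Disallow line lists the pages you want to block. Each Disallow line belongs to a group that starts with a User-agent line naming the crawler it applies to (User-agent: * matches every crawler), and the robots.txt file itself must be placed at the root of your site, for example http://www.mysite.com/robots.txt. You can list a specific URL or a pattern, and the entry should begin with a forward slash (/).

To block the entire site, use a forward slash:

- Disallow: /

To block a directory and everything in it, follow the directory name with a forward slash:

- Disallow: /junk-directory/

To block a page, list the page:

- Disallow: /private_file.html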
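Before uploading rules like these, you can sanity-check them with Python's standard-library `urllib.robotparser`. The sketch below is illustrative only: it assumes a hypothetical site at www.mysite.com, combines the Disallow examples above into one group for all crawlers, and parses the rules from a string instead of fetching them. The module does plain prefix matching, which is enough for simple paths like these.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt combining the Disallow examples above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /junk-directory/
Disallow: /private_file.html
"""

# Parse from a string rather than fetching http://www.mysite.com/robots.txt.
parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "http://www.mysite.com/junk-directory/old-page.html",  # inside the blocked directory
    "http://www.mysite.com/private_file.html",             # the blocked page
    "http://www.mysite.com/index.html",                    # not covered by any rule
):
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url}: {'allowed' if allowed else 'blocked'}")
```

The first two URLs come out as blocked and the last one as allowed.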

To remove a specific image from Google Images, add the following:

- User-agent: Googlebot-Image

- Disallow: /images/dogs.jpg

To remove all images on your site from Google Images:

- User-agent: Googlebot-Image

- Disallow: /

To block files of a specific file type (for example, .gif), use the following:

- User-agent: Googlebot

- Disallow: /*.gif$
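Python's `urllib.robotparser` only does plain prefix matching, so it will not evaluate the `*` and `$` in a rule like `Disallow: /*.gif$`. The sketch below is a hypothetical helper (`rule_matches` and `robots_pattern_to_regex` are our own names, not library functions) that translates a pattern into a regular expression, assuming Google's documented pattern rules: `*` matches any sequence of characters and a trailing `$` marks the end of the URL. It only checks one pattern against one path; it does not choose between competing Allow/Disallow rules or handle User-agent groups.

```python
import re


def robots_pattern_to_regex(pattern: str):
    """Translate a robots.txt path pattern into a compiled regular expression.

    Assumed semantics (following Google's documented pattern rules):
      * '*' matches any sequence of characters, including none;
      * a trailing '$' means the URL must end exactly there;
      * otherwise the pattern matches any path that starts with it.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every regex metacharacter, then turn the escaped '*' back into '.*'.
    body = re.escape(pattern).replace(r"\*", ".*")
    # re.match anchors at the start; \Z anchors at the very end when '$' was given.
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if a Disallow/Allow pattern covers the given path (plus any query)."""
    return robots_pattern_to_regex(pattern).match(path) is not None


print(rule_matches("/*.gif$", "/images/dogs.gif"))             # True
print(rule_matches("/*.gif$", "/images/dogs.gif?size=large"))  # False: does not end in .gif
print(rule_matches("/*.gif$", "/images/dogs.jpg"))             # False
print(rule_matches("/images/dogs.jpg", "/images/dogs.jpg"))    # True
```

Translating to a regular expression keeps the helper short; escaping first ensures that characters such as `.` in the pattern are matched literally.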

To prevent pages on your site from being crawled while still showing AdSense ads on those pages, disallow all bots except Mediapartners-Google. This keeps the pages out of search results but allows the Mediapartners-Google robot to analyze the pages to determine which ads to display. The Mediapartners-Google robot doesn't share pages with the other Google user-agents. For example:

- User-agent: *

- Disallow: /

- User-agent: Mediapartners-Google

- Allow: /
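To see how the two groups in this example are applied, here is a small sketch using Python's `urllib.robotparser`; the page URL on www.mysite.com is hypothetical. A crawler that has its own User-agent group follows that group, and every other crawler falls back to the `User-agent: *` group.

```python
from urllib.robotparser import RobotFileParser

# The AdSense example above, written out as a single robots.txt file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /

User-agent: Mediapartners-Google
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "http://www.mysite.com/articles/some-page.html"  # hypothetical URL
for agent in ("Googlebot", "Googlebot-Image", "Mediapartners-Google"):
    # Only Mediapartners-Google matches its own group; the others use "User-agent: *".
    print(agent, parser.can_fetch(agent, page))
```

Googlebot and Googlebot-Image are refused, while Mediapartners-Google is allowed to fetch the page.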

Note that the paths in directives are case-sensitive. For instance, Disallow: /any_file.asp would block http://www.mysite.com/any_file.asp, but would allow http://www.mysite.com/Any_file.asp. Googlebot will ignore white-space (in particular empty lines) and unknown directives in the robots.txt.
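Python's `urllib.robotparser` also compares paths case-sensitively, so it can illustrate this point: a rule for /any_file.asp blocks that exact path but not /Any_file.asp. The domain is the same hypothetical www.mysite.com.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /any_file.asp",
])

print(parser.can_fetch("Googlebot", "http://www.mysite.com/any_file.asp"))  # False: blocked
print(parser.can_fetch("Googlebot", "http://www.mysite.com/Any_file.asp"))  # True: different case
```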

The following links help you create a robots.txt file online:

- http://tools.seobook.com/robots-txt/generator/

- http://www.mcanerin.com/en/search-engine/robots-txt.asp

- http://www.robotstxt.org/robotstxt.html

These are some of the most useful tools for the job.

If you run into any problems while doing this, let us know in the comments.